On the Role of Static Analysis in Operating System Checking and Runtime Verification

A RESEARCH PROFICIENCY EXAM PRESENTED

BY

ABHISHEK RAI

STONY BROOK UNIVERSITY

Technical Report FSL-05-01

May 2005

Abstract of the RPE

On the Role of Static Analysis in Operating System Checking and

Runtime Verification

by

Abhishek Rai

Stony Brook University

2005

Software inevitably contains bugs. For certain classes of software

like operating systems, reliability is a critical requirement. Recent

research has shown that several commodity operating systems, even

after careful design and extensive testing, still contain a number of

bugs.

Methods for automated detection of bugs in software can be classified

into (1) static methods, (2) formal verification, and (3) runtime

checking.

In this paper, our focus is on verifying critical properties of large

and complex software like OSs, one such property being correctness of

memory accesses. For this purpose, we evaluate both static checking

techniques and runtime checking techniques, and present our

observations.

Static checking is useful because the cost of repairing a bug

increases along the software development lifetime. Bugs

discovered and fixed early result in significant cost savings.

We evaluate a representative set of existing static checking techniques

on the basis of soundness, precision, and usefulness in checking

large software like the Linux kernel.

We also cover the related problem of deriving property rules

for automated checking.

Static checking though useful, cannot in general catch all possible

bugs in C programs, without producing false alarms. Consequently,

it is mostly a best-effort exercise to find some, but not

all bugs.

However, memory access checking is too critical to be trusted to

static techniques. We introduce a survey of existing bounds checking

techniques. In particular, we show that it is possible to classify

the wide range of existing bounds checking techniques into a single

hierarchy. We evaluated existing techniques based on

soundness, performance, and usability.

A software bounds checking framework for the Linux kernel is

introduced and evaluated.

We observe that existing static checking methods have limited

coverage in detecting bugs. For verifying the correctness of critical

program properties like memory accesses, runtime checking is

necessary. However, runtime methods by themselves are not very useful

due to performance reasons. Hence, we conclude advocating static

analysis for use in runtime checking systems.

To my family

Contents

1 Introduction

2 Overview

2.1 Why Is C Not Enough

2.1.1 Types of Bugs

2.1.2 The Nature of Software Bugs

2.2 Bug Detection Techniques

2.2.1 Formal Verification

2.2.2 Runtime Checking

2.2.3 Static Checking

2.2.4 Issues and Trade-Offs in Static Checking

3 Static Analysis Survey

3.1 Annotation-Based Techniques

3.1.1 Lint

3.1.2 LCLint

3.1.3 Splint

3.1.4 Extended Static Checking

3.1.5 Cqual

3.2 Tool-Based Techniques

3.2.1 PREfix

3.2.2 ESP

3.2.3 Abstract Interpretation: ASTREE

3.2.4 SLAM

3.2.5 Metacompilation

3.3 Language-Based Approaches

3.3.1 Cyclone

3.3.2 Vault

3.3.3 Comparison of Cyclone and Vault

3.3.4 MOPS

4 Automated Deduction of Checking Rules

4.1 The Need for Automated Deduction

4.2 Invariant Inference using Predicate Abstraction

4.2.1 Inferring Loop Invariants

4.2.2 Predicate Abstraction

4.3 Invariant Inference for Annotation Based Checkers: Houdini

4.4 Runtime Methods for Inferring Invariants

4.5 Bugs as Deviant Behavior: Combining Static Analysis and Invariant Inference

4.6 Extracting Finite State Models from Code

4.6.1 Metal Based System for Flash

5 Case Study: Memory Bounds Checking

5.1 The Nature of Memory Bugs

5.2 Static Methods for Detecting Memory Bugs

5.2.1 Archer

5.3 Runtime Checking for Detecting Memory Bugs

5.3.1 Purify

5.3.2 Safe-C

5.3.3 Decoupling Computation and Bounds Checking

5.3.4 Backward-Compatible Extensions to Safe-C

5.3.5 Cash: Hardware Assisted Bounds Checking

5.4 Bounds Checking for the Linux kernel

5.4.1 KBCC

5.4.2 Mudflap

5.4.3 Kernel Code Electric Fence

5.5 Performance Evaluation

5.5.1 Kefence

5.5.2 KBCC

6 Static Analysis as a Means for Facilitating Runtime Checking

6.1 Static Analysis for Bounds Checking

6.1.1 CCured

6.1.2 Static Analysis in BCC

6.2 Static Analysis for Instrumentation of Code

6.2.1 Aspect-Oriented Programming

6.2.2 Source Code Instrumentation in Aristotle

7 Conclusions and Future Work

7.1 Static Checking

7.2 Runtime Bounds Checking

7.3 Future Work

List of Figures

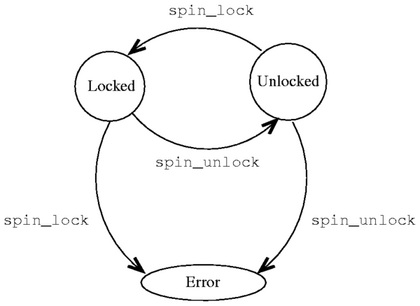

2.1 Example of an Application Level Protocol that should be satisfied in program code

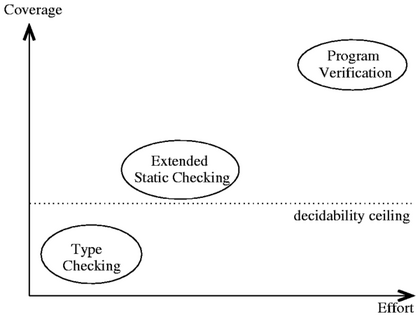

3.1 Static checkers plotted along the two dimensions coverage and effort

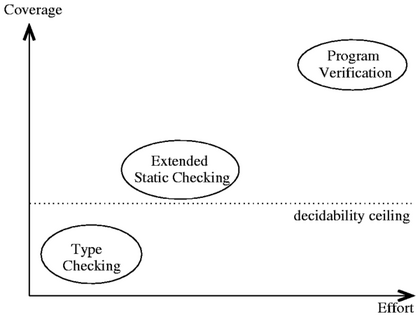

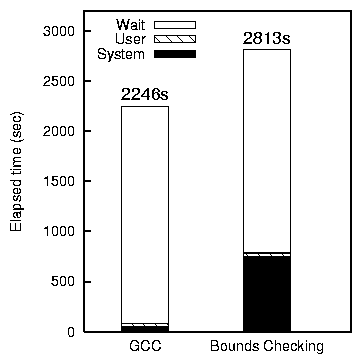

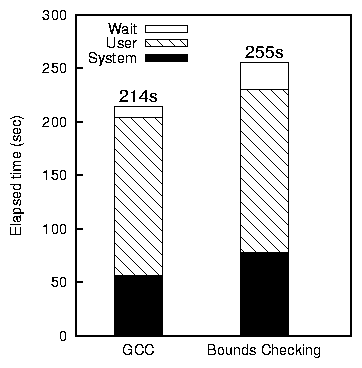

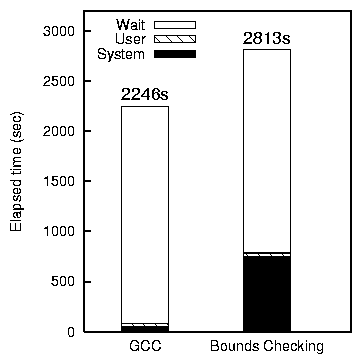

5.1 Bounds checking overhead for ReiserFS with Am-utils and Postmark

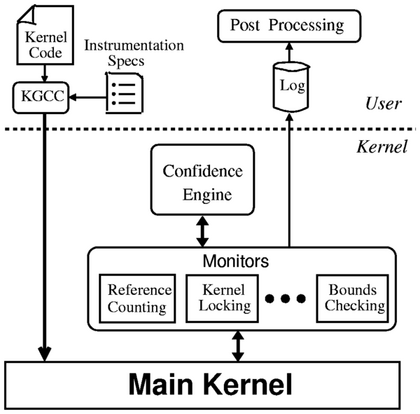

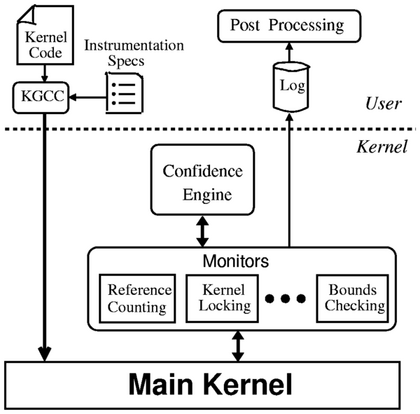

6.1 Architectural overview of the Aristotle system

List of Tables

3.1 Table showing how Cyclone handles various types of errors

3.2 Feature comparison of static checkers

5.1 A two-level classification for runtime bounds-checking technique

5.2 Table showing the differences between capability-based and availability-based runtime bounds checking

\LARGE{{Acknowledgments}}

Acknowledgments

I would like to thank my advisor, Professor Erez Zadok, for his

constant support for this project. The vision of this project

shaped during several insightful discussions with him. Besides,

he taught me several interesting and useful research practices

along the way. Sean Callanan, Radu Grosu, Scotta Smolka, Scott

Stoller, and Annie Liu provided an excellent forum for discussion

and development of ideas related to this project.

My committee members, Tzi-cker Chiueh and Scott Stoller, were

generous of their time and provided helpful input and comments

on the work. Amit Sasturkar and Rahul Agarwal provided valuable

feedback on some ideas in this paper.

I thoroughly enjoyed the company of members of File Systems and

Storage Laboratory, Sean Callanan, Gopalan Sivathanu, Charles Wright,

Avishay Traeger, and Nikolai Joukov during the course of this project.

Kapil Kumar, Mahadev Konar, Susanta Nanda, Sumit Jain, and Mohit Gupta

have made my stay in Stony Brook entertaining and pleasant. And,

friends from A1 are just as cherished today, on a daily basis, as they

were years before.

Finally, I would like to thank my family, papa, mummy, montu,

and didi for everything.

This work was partially made possible thanks to NSF CAREER award

EIA-0133589, NSF award CCR-0310493, and HP/Intel gifts numbers

87128 and 88415.1.

Operating system reliability has become more important than ever.

Users trust their computers with important data, and OS failures can

have catastrophic effects, like data loss, resource unavailability,

and at the very least, frustrated users. Moreover, commodity

operating systems are increasingly being used in enterprise scenarios

to create large scale computing and storage clusters. But commodity

operating systems are still unreliable. Recent techniques for

automated debugging of some commodity operating systems have

discovered numerous bugs [47].

Manual checking of code is not enough. For one, it is both

error-prone and time consuming, and secondly, humans easily get

overwhelmed by complexity. This motivates the need for automation of

the checking process. Moreover, the wide variety of bugs found in

these systems call for innovative techniques for automated bug

detection. Existing techniques can be classified into static methods,

model checking, and runtime checking. In this report we study

applications of static techniques for automated debugging and analysis

of software. In particular, we consider two different ways to apply

static analysis to software. First, we present a survey of static

checking techniques for automated debugging of OS kernels. Second, we

study the use of static analysis and instrumentation for runtime

checking of OS kernels.

Static methods have significant benefits over other approaches to

automated analysis of software. In comparison to runtime checking,

static analysis has three main advantages. First, static analysis

does not need to actually execute the software. Thus bugs can be

discovered and fixed early during the development process. Second,

software that is statically checked does not suffer from the

performance overhead of runtime checking. Third, static analysis

covers all code uniformly; unlike runtime checking techniques, bugs on

rarely executed code paths also get discovered. Although model

checking techniques share some of these merits over runtime checking,

they are rendered effectively intractable for large software systems

due to scalability problems and the lack of a formal specification or

model of the system.

We believe that static analysis of code has great potential in helping

improve software quality. Static checking of code is just one of its

possible applications. Additionally, static analysis of code can be

combined with other techniques like model checking and runtime

checking to produce even more powerful checking systems. For example, one can

first check software statically, and then leave the rest for runtime

verification. Similarly, one can combine static analysis with model

checking to automatically discover predicates using model checking

heuristics and then check them using static analysis [16].

We demonstrate the amalgamation of static analysis and runtime

checking through a memory bounds checking system we have developed for

the Linux kernel. Memory bound overrun bugs often have serious

consequences. They can result in security breaches, program

misbehavior and worse still, they can silently damage the system. It

is all the more acute for OS kernels where memory bugs can lead to

security breaches and permanent data loss.

We have ported two open source memory bounds checking systems into the

Linux kernel, and with the help of static techniques, have

significantly cut down their performance overheads. Additionally, we

have made significant modifications to reduce the number of false

positives, and support concurrency.

The rest of this paper is organized as follows.

In Section 2 we present a classification for bug

detection techniques and synthesize the basic ingredients for static

checking.

In Section 3 we present a survey of a static

checking techniques.

Section 4 motivates the need for automated

deduction of checking rules and presents a quick survey.

Section 6 generalizes static checking and

advocates using it as a means for enhancing the effectiveness of

runtime checking. We present some of our own work in this section.

Section 5 focuses on memory related bugs

in software. We present a survey of existing techniques and present

our own work in this field.

We finally conclude in Section 7 and identify future

avenues of research in this field.

In this section we derive the basic thesis of automated bug finding

in software. We first examine the nature of bugs in systems software.

Based on these bugs, we then present the design space for automatic

static checking techniques with particular emphasis on techniques for

checking large complex software written in C, like OS kernels.

Finally, we compare static checking with other methods of detecting

bugs in software, like formal verification and runtime checking.

2.1 Why Is C Not Enough

Since its very inception, C has been the de-facto language for systems

programming. In fact, systems programming and C have evolved side by

side ever since C's introduction in the 1970s. Like other trends in

computing industry, this was not necessarily designed to be that way,

but this is how things turned out to be. So far, C has suited well

for the requirements of a systems programming language. In general,

any systems programming language is required to provide the following

features.

- Control over low-level data representation

- Explicit memory management

- Performance, and "transparency" of performance: performance

characteristics of a C program should directly manifest in the source

code (e.g., language features like automatic garbage collection make

runtime overhead of a program unpredictable).

C satisfies all these requirements to the extent mandated by

traditional systems. However, as the scale and complexity of systems

increases, systems design as it is known today is getting increasingly

overwhelmed by complexity and bugs. This can be attributed to two

basic deficiencies in the design of the C language.

First, C is too permissive. As a result, compilers cannot catch all

possible bugs. For example, C tries to give programmers much

control over memory layout of data structures; and, it allows them to

control the lifetime of program resources (like memory). This is

partly by design: C does not enforce strong semantics at the language

level (type system). Also, at the time C was conceived, program

analysis was not advanced enough to develop a strongly typed but

flexible language. In any case, the design of C served well in

accommodating a wide range of programming styles and implementation

requirements. But, it also opens up the possibility of programming

bugs. The default type checking done by C compilers is weak and

misses many bugs. It is ultimately left to the developer to exercise

their prudence to produce "good" error-free code.

The second serious drawback of C is its lack of extensibility. There

is a large gap between the semantics of a typical systems program and

the simple rules enforced that the C compiler tries to enforce. As a

result, the compiler is bound to miss bugs. They may not be

"language" bugs, but they are bugs nevertheless.

Consider the following example. Synchronization

primitives are a common ingredient of systems programs. In the

context of the Linux kernel, it is required that the spin_lock

function is called, only when the lock is not already held. Similarly,

the spin_unlock function should be called while the lock is held.

This property can be conveniently depicted using a state diagram

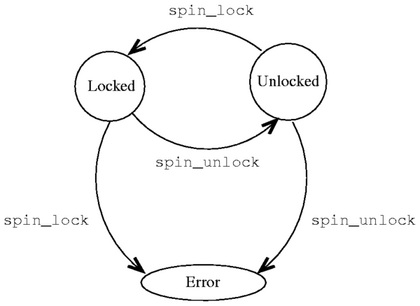

as shown in Figure 2.1.

Figure 2.1: Example of an Application Level Protocol that should be

satisfied in program code. During normal operation, the system can

be in one of two states: Locked or Unlocked. Calls to

spin_lock and spin_unlock cause transitions between these

states. Any other transition results in an error.

However, the C language as such, provides no support for incorporating

application level semantics into compile-time checking. The developer

has to validate higher level properties of their code using

alternative methods.

Code inspection is still the most widely

exercised code checking technique (after compilation). But humans can

easily get overwhelmed by complexity beyond a few hundred lines of

code. As a result, manual checking is error-prone and time consuming.

Moreover, manual checking has poor incremental returns: as different

components of the system evolve and rules and invariants change, it is

quite an art trying to maintain such code. Local changes applied to

only a small part of the program often end up breaking other,

seemingly unrelated parts of the code. Despite all these challenges,

C has no built-in support for language-extensions for checking program

conformance to higher level properties.

Figure 2.1: Example of an Application Level Protocol that should be

satisfied in program code. During normal operation, the system can

be in one of two states: Locked or Unlocked. Calls to

spin_lock and spin_unlock cause transitions between these

states. Any other transition results in an error.

However, the C language as such, provides no support for incorporating

application level semantics into compile-time checking. The developer

has to validate higher level properties of their code using

alternative methods.

Code inspection is still the most widely

exercised code checking technique (after compilation). But humans can

easily get overwhelmed by complexity beyond a few hundred lines of

code. As a result, manual checking is error-prone and time consuming.

Moreover, manual checking has poor incremental returns: as different

components of the system evolve and rules and invariants change, it is

quite an art trying to maintain such code. Local changes applied to

only a small part of the program often end up breaking other,

seemingly unrelated parts of the code. Despite all these challenges,

C has no built-in support for language-extensions for checking program

conformance to higher level properties.

2.1.1 Types of Bugs

It is not possible to give a complete listing, or even a useful

classification of all possible bugs. In this section we discuss some

common bugs and their causes. This discussion will help us classify

bug detection techniques in the next section.

Let us consider a simple bug. The following piece of C code tries to

copy the string "hello world" into the array arr.

fn()

{

char arr[11];

strcpy(arr, "hello world");

}

One can make two observations regarding this code. First, the

allocated space for arr is too small to accommodate the entire

string in it. Secondly, strcpy does not detect and handle this

situation. As is obvious, this operation will cause data to be

written beyond the limits of the array arr. The result could be

a program crash, or misbehavior due to memory corruption, or, no

visible effect at all. One can take two points from this example:

- simple static checking can detect some potentially dangerous

bugs.

- knowledge of procedure interfaces would be useful, e.g., knowing

that strcpy will not check the operation for bounds overflow.

We now present some common types of bugs.

Memory Errors

These are probably the most common bugs in C programs. Memory access

bugs, especially memory buffer overruns have caused much grief to IT

industry. We classify existing techniques for detecting memory bugs

in Section 5. We also consider in detail

the case of runtime bounds checking.

Inconsistent Interfaces

In large complex systems, which have several smaller components making

up the whole system, it is important that individual components have

strictly enforced interfaces. When this is not the case, the software

can behave unpredictably. This problem can be related to the issue of

automatically enforcing higher-level system specifications.

Traditionally, software written in C has not had access to checkable

specifications. For example, Jass or JML for Java.

Violation of Temporal Properties

Imperative programs are essentially temporal artifacts. There is

often a prescribed temporal ordering of operations. For example, lock

before unlocking, etc. Violations of temporal properties can result

in synchronization errors (deadlocks, race conditions), memory leaks,

use after free (dereferencing of pointers which have already been

freed), etc.

Bugs due to Software Development Processes

Software development processes introduce several bugs. One of the

nastier ones is regression bugs. As code evolves over time,

it is more difficult to maintain. First, developers leave and with

them goes ad-hoc undocumented knowledge about the code. Second, as

new code is added, components may get out of sync with each other, or

existing code may be broken. This is very much the case when software

specifications are not tightly coupled with the code, and the

developer is responsible for ensuring compatibility. For example,

checkable interface specifications, or annotation-based systems can

help prevent such regressive digressions (Section

3).

This paper does not address problems arising from incorrect

synchronization in concurrent programs, including problems like data

races, atomicity violations, and deadlocks. These problems have been

extensively studied in literature, and static, dynamic, as well as

hybrid techniques addressing these problems have been developed

[23,30,56,51,52,112].

2.1.2 The Nature of Software Bugs

In recent times, a lot of research effort has been focused at

detecting and studying bugs in operating system kernels. This is no

surprise considering the possible implications of buggy operating

systems. The two leading operating systems of today,

Linux [47,137] and Microsoft

Windows [16] have received much scrutiny from researchers

developing automated static checking systems. Also, these methods

have been successful in finding numerous bugs.

Chou et al. studied of bugs in the Linux

kernel [32]. The bugs they report were found using

their static checking system which we discuss in Section

3.2.5. In particular, they made the following notable

observations about bugs in the Linux kernel which justify some of the

claims we have made above:

- Bug distribution is much higher in device drivers than the core

kernel. This is because the core kernel undergoes much greater

scrutiny and runtime testing than device drivers. However, a buggy

device driver is just as likely to crash a kernel.

- Long and complex functions have a higher number of bugs per line

of code. Thus, programming in C does not handle complexity very well.

- Bug distribution between files is logarithmic: few files have

most bugs while most files have none or few bugs. This is probably

due to the fact that thousands of developers are involved in kernel

development, and they have very different backgrounds-they all not

be trusted with producing completely bug-free code.

- Some bugs in the Linux kernel had lived for a couple of years

before they were detected. This serves to demonstrate that

traditional methods do not achieve good coverage.

Apart from the issue of programming errors, another grossly mishandled

case is the absence of a unified mechanism for graceful recovery from

errors (exceptions). Since error detection is often postponed to

runtime (instead of being pointed out at compile time), the system is

usually not well equipped to handle every possible error case. In the

worst case, this could cause the whole system to crash, leading to

loss of data or service. The cases in which the system misbehaves

without explicitly crashing are equally disagreeable.

The approaches we discuss in this section try to address some or all

of these issues in different ways. Existing methods for detecting or

preventing memory bugs can be classified into language based

approaches, static analysis, and runtime checking techniques

2.2 Bug Detection Techniques

Bug detection is a very broad field. The central thesis of this paper

is that static detection of bugs can be useful; however, they cannot

completely verify large complex systems. For such systems, static

checking is best combined with runtime checking for catching all bugs.

Static checking is useful because the cost of repairing

a bug increases along the software development time-line. An early

discovery can result in a substantial saving. This paper demonstrates

that static analysis can be very useful in these regards.

Of course, it would be a better idea to avoid bugs altogether (better

programming practices); however, in the absence of completely sound

bug avoidance, having redundant checking is better than producing

buggy programs.

Apart from "discovering" bugs, it would be very useful if the bug is

detected as close to its origin in the code as possible.

This makes for easy fixing of the bug. For instance, run-time memory

checking facilities can also detect NULL pointer dereferences.

However, they usually do not provide detailed history of events

leading to the NULL pointer, a good static checker can in many

cases backtrack in its analysis state to provide more useful information.

We now compare static checking with alternative bug detection

strategies for the case of checking OS kernels.

2.2.1 Formal Verification

Twenty years ago, Rushby outlined the difficulties in verifying secure

kernels [108] and suggested a physical separation of OS

components. Neumann et al. built comprehensive

mechanisms for proving the security of the PSOS operating system

[96], a hierarchically-structured OS written in a

strongly-typed language. Later works focused on compartmentalization,

boundary protection, and some verification of OSs such as Mach

[9,62], Pebble [59], EROS

[117], DEOS [101], and Fiasco

[68,124]. In the Kit project, Bevier tried to prove

the correctness of an OS using theoretical techniques [21].

As security concerns grew with the Internet, the U.S. Department of Defense

released The Orange Book [41], which serves as a

"bible" for evaluating secure systems. Arbaugh showed the life-cycle of

system vulnerabilities, emphasizing the importance of early discovery,

disclosure and fixing of bugs, and then releasing patches

[12]. They also showed how important it was for the

security and integrity of computer systems, to be able to verify each

component and layer independently [11].

Model checking of OSs has been used to detect specific problems within OS

components.

Pike et al. used the SPIN [20] verification system to

detect a certain subtle race condition in Plan 9 [103].

More recent efforts seek to extract models from OS source code

[14,17,87,48], with

an emphasis on checking data-independent properties of device drivers

and network protocols, and to apply model checking directly to software

[36,61,121,80,93].

However, model checking techniques do not trivially extend to

operating systems that were not designed with verifiability as a goal

(e.g., Linux). Model checking such a huge system is impractical for

two reasons: (1) lack of a complete model or specification for the

system, (2) state space explosion. Nevertheless, several static

checking techniques use ideas from the model checking world

[16,36,42].

2.2.2 Runtime Checking

Most runtime external checking of systems is incidental. For example,

the Bonnie tool is a program that exercises the memory (VM) and cache

subsystems of the OS by creating very large files and then randomly

reading and writing parts of them [24,35].

Similarly, file-system developers test a new or modified file system

by compiling a large package (such as the kernel source itself) inside

a mounted instance of the new file system

[100,134,136,135].

The POSIX standard specifies a common set of system calls for OSs

[70] and also offers a standard description of how to

test code for POSIX compliance [69].

In the fields of security and intrusion detection, wrappers are used

to guard software interfaces by monitoring inputs, outputs, and

sequences of operations

[126,18,19,114,85,115,133].

Chiueh showed how to protect loadable kernel modules from each other

on x86-based OSs such as Linux [29]. We also propose

to wrap system calls and monitor them for compliance, but our wrappers

are generated from formal specifications of the system-call

interfaces. Wrapper mechanisms with an overhead of no more than

1-2% are possible

[18,19,134,136,135].

Our monitoring is intended not only for security, but for any deviant

behavior.

Runtime checking in tools such as the Bounds-Checking Gnu C (BCC)

[25], Purify [106] and Insure++ [79], perform

runtime monitoring for memory corruptions; e.g., running off of the

end of a string in C. We discuss this in detail in Section

5. Run-time monitoring can also detect

potential race conditions [113] and atomicity

violations [83,52]. Also, some

methods have combined runtime checking with static checking to obtain

robust property checking. Specifically, the static checking

properties validates all that can be verified statically, the

remaining checks are left to be checked at runtime.

2.2.3 Static Checking

The central tenet of static checking is that many abstract program

restrictions map clearly to source code constructs. Static checking

is based on the science of program analysis. Program

analysis offers static techniques for predicting safe and computable

approximations to the set of values or behaviors arising dynamically

at run-time when executing a program on a computer

[97]. However, program analysis-including

finding possible run-time errors-is undecidable: there is no

mechanical method that can always answer truthfully whether programs

may not exhibit runtime errors. When faced with this mathematical

impossibility, the alternative has been to apply certain techniques

that simplify the overall program analysis problem into more tractable

problems. There are four basic approaches to program analysis:

- Data Flow Analysis treats programs as graphs: the nodes

are the elementary blocks and the edges describe how control might

pass from one elementary block to another. For example, for handling

the problem of reaching definitions, the standard approach is

to coin mathematical abstractions for the role of edges in the graph

and solve them using a set of equations. Symbolic execution as used

in PREfix and SLAM (Section 3.2) are types

of data flow analysis.

- Constraint-Based Analysis verifies global properties by

solving a set of local constraints generated from the program text.

Constraint generation from program text has the effect of separating

specification from implementation. Constraints hide implementation

details and pave the way for tractable, structural proofs. There are

two basic constraint theories: data flow analysis and type-inference.

In Section 3.1.5 we present Cqual which is a

constraint-based type inference system.

- Abstract Interpretation (AI) maps programs into more

abstract domains [74,8]. This makes

analysis more tractable and potentially useful for checking. The

theory of AI differs from other approaches, it is a general

methodology for calculating analyses rather than specifying them and

then relying on a posteriori validation. For AI to be effective, it

imposes a constraint on the nature of the abstraction and the

concretization functions. This is called the galois

connection and serves to produce a safe over-approximation analysis.

ASTREE (discussed in Section 3.2.3) is an example of an

AI-based static checker. In Sections 3.2.4 and

4.2, we use predicate abstraction

[110], which is a special form of abstract

interpretation in which the abstract domain is constructed using a

given set of predicates.

- Type and Effect Analysis is an amalgamation of two

ingredients: an effect system and an annotated type

system. The effect system tells the effect a statement's execution

has. The annotated type system provides the semantics for the effect

system. Type analysis is the most commonly used technique enforced by

compilers for static checking (e.g., the gcc -Wall option).

Type inference (Section 3.1.5) is a useful approach. Most

of the checkers discussed in Section 3 use some

form of strong type enforcement.

Despite the apparent differences, these approaches to program analysis

are equally powerful. There is some commonality among these

approaches. The art of program analysis entails cultivating the

ability to choose the right approach for the right task and in

exploiting insights developed in one approach to enhance the power of

the other. Moreover, one can argue that it is not possible to give a

single clear-cut classification of static checking techniques.

This is because (1) there is a significant overlap in different techniques,

(2) seemingly radical approaches are often equivalent in terms of

program analysis power, and (3) designing good static checkers is

increasingly an engineering problem-innovative implementations

often beat more ambitious but less useful ones.

Nonetheless, for the purpose of discussion, it helps to divide

our subjects into a few classes. In Section 3 we

classify static checking techniques into annotation-based methods, and

tool-based methods. Although this may not be the right choice, it

certainly has the advantage of better capturing the programmer's

imagination.

2.2.4 Issues and Trade-Offs in Static Checking

Before we discuss existing static analysis techniques, it would be

useful to get a taste of common engineering issues and

trade-offs in designing static checkers. We qualify these as

"engineering" because they require a deep knowledge of available

information and a creative intuition for effective handling. The

choices made in handling these issues often have little effect on the

"power" of the static analysis as such, but they often are the

deciding factor in the acceptance of the system.

We present below a listing of the issues and trade-offs we identified.

This is not an exhaustive list. Also, they are mostly orthogonal; in

most cases one can realign one trade-off without disrupting others too

much.

- Soundness and Completeness

It is well known that program analysis-including finding possible

run-time errors-is undecidable. When faced with this mathematical

impossibility, the alternative has been to apply certain techniques

that simplify the overall program analysis problem into more tractable

problems. This approximation invariably results in loss of soundness

or precision. Soundness in the context of static checking is defined

as catching all the bugs in the code. Completeness reflects

that all bugs caught by the checker should be true bugs in

the system and not "false positives." We also use the term

precision to describe completeness in this paper. So, if a sound

analysis is desired, precision has to be sacrificed, and vice versa.

In other words, there is always a soundness-precision trade-off. For

example, the PREfix system (Section 3.2.1) sacrifices

soundness for precision. Imprecise checkers usually generate a lot of

false error notices and separating them from real errors is a

time-consuming process. PREfix takes the approach of analyzing only

some program paths, but it analyzes them well, yielding

precise results. This precision comes at the cost of soundness. The

ASTREE checker (Section 3.2.3) which is based on abstract

interpretation allows the soundness-completeness trade-off to be

adjusted by simply controlling a parameter. This is an interesting

and powerful use of abstract interpretation.

- Annotations vs. External Checking

Someone designing a static checker is invariably faced with this

issue regarding what interface to provide to the programmers: code

annotations or external checking. Whereas annotations are applied as

a layer on top of the program code, external checking can directly

apply to unmodified program code. Annotations provide a fine-grained

specification intermixed with program code. Thus it is tightly bound

to the code and can even double up as a protocol specifications.

Consequently, the likelihood of annotations getting out of sync with

the code (e.g., due to regression) is small. Also, we believe that

due to tight coupling between program code and annotations,

annotation-based systems are more capable of attacking soundness than

external checking systems. External checking systems have some

advantages over annotations. First, annotations have poor

reuse: one has to add them to every place in the code where

they apply. External checkers isolate checking specifications into

modular specifications which can then be uniformly applied to

the program code. Second, users may not favor a tight integration

between checking and program code the way annotations do it, because

tight coupling implies difficulty in migration to different checkers

in the form of rewriting effort.

Third, the annotation effort is usually difficult to predict and

measure in advance at the checker-design stage. For example,

consider the Cqual annotation-based checker (Section 3.1.5).

Its designers initially chose annotations believing that the

annotation overhead will be manageable and will give good returns on

the cost of annotating. However, when Cqual was applied to

increasingly complex programs, it was found to yield a large number of

false error notices. As a result, new annotations were added

to suppress false positives. Thus it is difficult to always predict

the amount of annotation effort needed. However, recent research has

been focused at automatic inference of annotations saving the

programmer from the effort. We discuss one such system, Houdini, in

Section 4.3.

- Scalability

Automated bug detection can potentially be most useful for large

complex programs. However, traditional automated checkers can easily

get overwhelmed with the complexity of these programs. The key is to

design scalable checkers. Consider the case of

interprocedural vs. Intraprocedural analysis. Interprocedural

analysis can potentially discover more bugs, and can be more precise

than intraprocedural analysis. For example, 90% of the errors caught

by the PREfix interprocedural checker (Section 3.2.1)

involve interactions of multiple functions. However,

interprocedural analysis can be very expensive in terms of CPU and

memory requirements. Also, interprocedural analysis may scale

super-linearly with program size. Intraprocedural, possibly flow

sensitive analysis is more tractable. One commonly followed approach

is memoization: maintaining "summaries" of analyzed

functions for possible reuse (Section 3.2.5). Summaries make

interprocedural analysis much more tractable. In fact, systems like

Archer (Section 5.2.1) implement highly scalable

path-sensitive interprocedural analysis by using summaries for

analyzed functions. Note that summaries are mostly used in

conjunction with bottom-up analysis so that any function call

can be replaced by a summary for that function.

- Aliasing

Aliasing is often regarded as the bane of program analysis. Combating

aliasing is a recurrent theme of the checkers presented in this paper.

Static analysis systems that aim to give soundness guarantees

(Section 3.1.5) need to make conservative assumptions about

the presence of aliases. This significantly limits the power of

a static analyzer. A common solution is to allow the programmer to

declare a variable as un-aliased in a certain scope, allowing the

analyzer to aggressively infer properties in that scope without

fearing any aliases.

Chapter 3

Static Analysis Survey

In this section we describe a representative set of existing static

checking systems. We place particular emphasis on the scalability and

the usefulness of the checker. Scalability is important because our

ultimate goal is checking large complex software like OS kernels.

Usefulness has many dimensions: the more bugs a checker finds the more

useful it is, fewer false bug reports, and ease of use by the

end-programmer to easily add custom checks. We classify

static checking techniques into annotation based methods, and tool

based methods. Although this may not be the right choice, it

certainly has the advantage of better capturing the programmer's

imagination.

We conclude this section with a quick analysis of how individual

systems compare against each other.

3.1 Annotation-Based Techniques

Program annotations is the term given to specifying behavioral aspects

of code. For example, the const qualifier in C specifies a

behavioral constraint. Program annotations serve as a useful tool for

automated checking of behavioral aspects of programs. Annotations can

also be viewed as a means for augmenting the semantic and behavioral

content of a program, by specifying additional constraints that the

code must satisfy. Thus, annotations help enforce additional semantic

checking of the program over the default checking performed by the

compiler.

In this section we consider a few annotation-based tools for static

checking. Although this is not in any way a comprehensive listing,

still to the best of our knowledge, it is a representative list of the

state of annotation assisted checking in software.

Lint is probably the oldest static checker (other than the language

compiler) that continues to be widely used today. Lint checks C

programs for a small fixed set of syntax and semantic errors. It

performs simple flow sensitive and type aware checking, but can only

detect violations of basic program properties. In fact, Lint is now

essentially superfluous since many of the checks performed by Lint

have been in GCC for a long time now. However, Lint is of historical

value given the set of checkers that were inspired by it, two of which

are the subject of this section: LCLint and Splint.

Lint is a command-line driven tool with command line arguments for

specifying which of the several checks provided by Lint should be

applied to the program. The annotation support in Lint was introduced

for suppressing false alarms.

One additional feature of Lint is its "code portability" check: when

invoked with the -p option, Lint checks the code for possible

portability issues from its dictionary of known portability problems

[122].

LCLint [49,2] grew out of the Larch Project

at the MIT Lab for Computer Science and DEC Systems Research Center.

It was originally named after LCL [123], the Larch C

Interface Language and Lint.

LCL and Larch

The Larch family of languages [65,92] represent a

two-tiered approach to formal specification. A specification is built

using two language: the Larch Shared Language (LSL), which is

independent of the implementation language, and a Larch

Interface Language designed for the specific implementation language.

An LSL specification defines sorts, analogous to abstract

types in a programming language, and operators, analogous to

procedures. It expresses the underlying semantics of an abstraction.

The interface language specifies an interface to an abstraction in a

particular programming language. It captures the details of the

interface needed by a client using the abstraction and places

constraints on both correct implementations and uses of the module.

The semantics of the interface are described using primitives and the

sorts and the operators defined in LSL specifications. Interface

languages have been designed for several programming languages. LCL

[123] is a Larch interface language for Standard C. LCL

uses a C-like syntax.

LCLint

Much of the difference between Lint and LCLint is that Lint's

annotations are related to minimizing the number of false positives,

whereas LCLint's annotations have more features, only some of which

are used for this purpose. LCLint makes extensive use of annotations

to define behavioral aspects of code and to suppress false positives.

LCLint's annotations were originally derived from a subset of LCL

specifications. Although separate LCL specifications can provide more

precise documentation on program interfaces than is possible with

LCLint annotations, LCLint's designers chose the annotations approach

over separately provided specifications for obvious reasons.

LCLint can detect problems like violations of information hiding;

inconsistent modifications of caller-visible state or uses of global

variables; memory management errors including uses of dead storage and

memory leaks; and undefined program behavior. LCLint checking is done

using simple dataflow analyses and is potentially as fast as a

compiler.

LCLint was designed to run frequently on large programs and hence

checking efficiency and scalability were important requirements. As a

result, LCLint employs a number of simplifications for faster analysis

and ease of use. For example, LCLint does not incorporate

inter-procedural data flow analysis, instead relies on programmer

annotations for adjudging the role of function calls, and loops are

considered identical to if statements for the purpose of the

analysis. Also, LCLint focuses specifically on errors at interface

points. Hence, it only uses weak intra-procedural alias analysis that

infers inter-procedural actions from the annotations.

Splint 3.0.1 [3] is the successor of LCLint version

2.5q. The main changes are support for secure programs (hence the

name Splint) and extensible checks and annotations.

User-defined Annotations

Currently, LCLint users are limited to a pre-defined set of

annotations and associated checking. This works well as long as their

programming style is consistent with the methodology supported by

LCLint (e.g., abstract data types implemented by separate modules,

pointers used in a limited systematic way), but is problematic if they

need to check a program that does not adhere to this methodology. For

example, LCLint provides annotations for checking storage that is

managed using reference counting. An annotation is used to denote an

integer field of a structure as the reference count, and LCLint will

report inconsistencies if new pointers to the structure are created

without increasing the reference count, or if the storage associated

with the referenced object is not deallocated when the reference count

reaches zero. If a program implements reference counting in some

other way (for example, by keeping the reference counts in a separate

lookup table), however, LCLint provides no relevant annotations or

checking. More generally, applications have application-specific

constraints that should be checkable statically. These could include

value consistency requirements, event ordering requirements, and

global constraints. Splint provides extensions to LCLint that address

this problem by supporting user-defined annotations. Programmers have

some freedom to invent new annotations, express syntactic constraints

on their usage, and define checking associated with the user-defined

annotation in different contexts.

3.1.4 Extended Static Checking

ESC/Java [54] descends from the intellectual

tradition of program verification. It is an annotation-based static

checker for Java programs that uses theorem solving of program

predicates to check for correctness. ESC/Java is intermediate in both

power and ease of use between type-checkers and theorem-provers, but

it aims to be more like type-checkers and is lightweight in comparison

with to theorem-provers. This way, the designers of ESC/Java hoped to

make it easy to use by the programmers (like type-checkers) rather

than extraordinarily powerful but so difficult to use that programmers

shy away from it (like theorem-provers). Also, hence the name

"extended static checker."

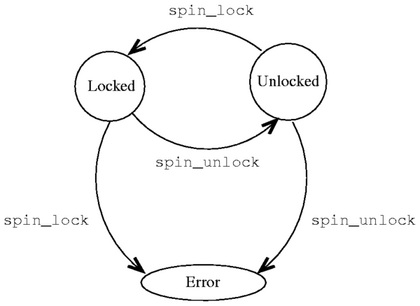

Figure 3.1 shows the intuitive

placement of "extended static checking" between conventional

type-checking and program verification [54].

Figure 3.1: Static checkers plotted along the two dimensions

coverage and effort (not to scale). Extended Static Checking as

implemented in ESC/Java is intermediate both in coverage and effort

needed between type-checkers and program verification systems.

At the time ESC was first proposed [42], powerful

static checkers needed programmer assistance for checking. This is

not in agreement with ESC's goal for ease of use and hence ESC does

not require any programmer assistance while checking.

ESC/Java is designed to scale well with code size. It employs modular

checking. Specifically, when checking the body of a function f,

ESC/Java does not examine bodies of functions called by f.

Rather, it relies on the specifications of those functions, as

expressed by annotations. Hence, ESC/Java can check individual

components of a system separately, leading to good scalability.

ESC/Java supports a powerful specification language. The

specification language is the language used to write ESC/Java

annotations. It is composed of one or more specification

expressions, or pragmas. ESC/Java provides a rich

specification language which revolves around these expressions.

ESC/Java expressions can be arbitrary first order logic formulas.

Also, they can be nested or combined like standard Java expressions,

and are type-checked in ways similar to Java code. However, there are

inevitably some differences between them and standard Java syntax.

Also, ESC/Java specifications can be provided in separate files

(.spec files). This is useful when the Java sources they

annotate are unavailable for use by the checker.

ESC/Java checks the first-order logic expressions using the Simplify

automatic theorem prover [38]. First-order is

logic is undecidable, hence no theorem prover can prove it in

polynomial time. Simplify is a best effort theorem prover and tries

to prove or disprove its input till as long as it can before giving

up.

The operation of ESC/Java consists of the following steps:

Figure 3.1: Static checkers plotted along the two dimensions

coverage and effort (not to scale). Extended Static Checking as

implemented in ESC/Java is intermediate both in coverage and effort

needed between type-checkers and program verification systems.

At the time ESC was first proposed [42], powerful

static checkers needed programmer assistance for checking. This is

not in agreement with ESC's goal for ease of use and hence ESC does

not require any programmer assistance while checking.

ESC/Java is designed to scale well with code size. It employs modular

checking. Specifically, when checking the body of a function f,

ESC/Java does not examine bodies of functions called by f.

Rather, it relies on the specifications of those functions, as

expressed by annotations. Hence, ESC/Java can check individual

components of a system separately, leading to good scalability.

ESC/Java supports a powerful specification language. The

specification language is the language used to write ESC/Java

annotations. It is composed of one or more specification

expressions, or pragmas. ESC/Java provides a rich

specification language which revolves around these expressions.

ESC/Java expressions can be arbitrary first order logic formulas.

Also, they can be nested or combined like standard Java expressions,

and are type-checked in ways similar to Java code. However, there are

inevitably some differences between them and standard Java syntax.

Also, ESC/Java specifications can be provided in separate files

(.spec files). This is useful when the Java sources they

annotate are unavailable for use by the checker.

ESC/Java checks the first-order logic expressions using the Simplify

automatic theorem prover [38]. First-order is

logic is undecidable, hence no theorem prover can prove it in

polynomial time. Simplify is a best effort theorem prover and tries

to prove or disprove its input till as long as it can before giving

up.

The operation of ESC/Java consists of the following steps:

- First, ESC/Java loads, parses, and type-checks the files named

on the command line, as well as any other files needed because their

contents are directly or indirectly used by files named on the command

line.

- Next, for each class whose function bodies are to be checked,

ESC/Java generates a type-specific background predicate encoding such

information as subtype relations, types of fields, etc. in the class

to be checked and the classes and interfaces it uses.

- Next, ESC/Java translates each function to be checked into a

logical formula called a verification condition (VC). As an

intermediate step in this translation, ESC/Java produces a command in

an intermediate language [86] based on Dijkstra's

guarded commands. The intermediate language includes commands of the

form assert E, where E is a boolean expression of the

language. An execution of a command is said to "go wrong" if

control reached subcommand of the form assert E when E is

false. Ideally, when a function f is translated into a command

C and then to a verification condition V, the following

three conditions should be equivalent:

- There is no way that f can be invoked from a state

satisfying its specified preconditions and then behave erroneously by,

for example, dereferencing null, violating an assert pragma,

terminating in a state that violates its specified postconditions,

etc.

- There is no execution of C that starts in a state

satisfying the background predicate of f's class and then goes

wrong.

- V is a logical consequence of the background predicate.

In practice, the translation is incomplete and unsound, so there may

be semantic discrepancies between f, C, and V.

Finally, ESC/Java invokes the Simplify [38]

theorem prover, asking it to prove each body's verification given the

appropriate background predicate. If an attempted proof succeeds (or

if Simplify exceeds specified resource limits in attempting the proof,

or if ESC/Java exceeds specified resource limits generating the

verification condition), then ESC/Java reports no warnings for the

body. If the proof fails (other than by exceeding resource limits),

Simplify produces a potential counterexample context, from which

ESC/Java derives a warning message.

Unsoundness and Incompleteness in ESC/Java

ESC/Java is unsound and incomplete and can hence miss errors actually

present in the program it is analyzing. This is inevitable from

ESC/Java's design, since it is meant to be an extended static checker

rather than a program verifier. However, ESC/Java provides pragmas

for the programmer to control the soundness and incompleteness of

checking. Thus users can balance annotation effort, completeness, and

verification time. Here are some known causes of unsoundness in

ESC/Java (taken from the ESC/Java Manual [76]).

- Loops: ESC/Java does not consider all possible

execution paths through a loop. It considers only those that execute

at most one complete iteration (plus the test for being finished

before the second iteration). This is simple, and avoids the need for

loop invariants, but it is unsound. The users can modify ESC/Java's

treatment of loops by using the -loop command-line option to

specify how many loop iterations should ESC/Java consider. We study

methods for automatically inferring loop invariants in Section

4.2.1. Archer (Section 5.2.1) uses symbolic

execution and heuristics to aggressively unroll loops whenever

possible.

- Trusting Pragmas: The assume, axiom, and

nowarn pragmas allow the user to introduce assumptions into the

checking process. ESC/Java trusts them. If the assumptions are

invalid, the checking can miss errors. For example, ESC/Java depends

on programmers to supply monitored and monitored_by

pragmas telling which locks protect which shared variables. In the

absence of such annotations, ESC/Java will not produce a warning when

a routine might access a variable without holding the appropriate

lock.

- Search Limits in Simplify: If Simplify cannot find a

proof or a (potential) counterexample for the verification condition

for a routine within a set time limit, then ESC/Java issues no

warnings for the method, even though it might have issued a warning if

given a longer time limit. If Simplify reaches its time limit after

reporting one or more (potential) counterexamples, then ESC/Java will

issue one or more warnings, but perhaps not so many warnings as it

would have issued if the time limit were larger. There is also a

bound on the number of counterexamples that Simplify will report for

any conjecture, and thus on the number of warnings that ESC/Java will

issue for any routine. Again, these limits can be set by the

programmer.

- Concurrency: Although we do not consider concurrency in

this paper, it is nonetheless an important topic in program analysis

research. Interestingly, ESC/Java assumes that the value of a shared

variable stays unchanged if a routine releases and then reacquires the

lock that protects it, ignoring the possibility that some other thread

might have acquired the lock and modified the variable in the interim.

Here are some causes and consequences of incompleteness in ESC/Java:

- Incompleteness of the Theorem-Prover: The verification

conditions that ESC/Java give to the Simplify theorem prover are in a

language that includes first-order predicate calculus along with some

(interpreted) function symbols of arithmetic. Since the true theory

of arithmetic is undecidable, Simplify is necessarily incomplete. In

fact, the incompleteness of Simplify's treatment of arithmetic goes

well beyond that necessitated by Godel's Incompleteness Theorem. In

particular, (1) Simplify has no built-in semantics for multiplication,

except by constants, and (2) Simplify does not support mathematical

induction. Also, first-order predicate calculus (FOPC) is only

semidecidable-that is, all valid formulas of FOPC are provable,

but any procedure that can prove all valid formulas must loop forever

on some invalid ones. But it is not useful for Simplify to loop

forever, since ESC/Java issues warnings only when Simplify reports

(potential) counterexamples. Therefore Simplify will sometimes report

a (potential) counterexample C, even when it is possible that

more work could serve to refute C.

- Modular Checking: ESC/Java's use of modular checking

causes it to miss some inferences that might be possible through whole

program analysis. However, the designers chose to retain modular

checking in lieu of its scalability benefits.

Cqual (previously Carillon) [57] is an annotation-based

extension to C that uses the power of flow-sensitive

type-state analysis to catch bugs statically. Cqual is an

amalgamation of ideas from type-checking and constraint-based

analysis. The user extends the language type system with

flow-sensitive type qualifiers, which are atomic properties that

refine standard types similar to standard C type qualifiers such as

const. Cqual checks constraint satisfaction on these type

qualifiers inter-procedurally. Cqual's analysis is sound,

meaning that it does not miss a bug, although it may report false

errors (it is incomplete).

Programmers uses Cqual by specifying two things: they augment standard

C code with type qualifiers, and, identify sub-typing relationship

between the type qualifiers. For example, consider the following

program constraint introduced to prevent format string attacks

[116].

Data entered by a user should not directly be used as argument

to printf since the user may be an adversary.

This constraint can be specified in Cqual by introducing two type

qualifiers for character strings: tainted for strings that could

be controlled by an adversary, and untainted for strings that

must not be controlled by an adversary. The sub-typing relationship

between the two qualifiers is denoted as:

untainted < tainted

meaning that untainted is a subtype of tainted, any piece

of code that accepts a tainted argument can be passed an

untainted argument, but not vice versa.

The sub-typing relation is typically specified as a lattice of type

qualifiers with partial-order relations between the qualifiers. CQUAL

has a few built-in inference rules that extend the subtype relation to

qualified types. Using the extended inference rules, CQUAL performs

qualifier inference to detect violations against the type relations

defined by the lattice. Intuitively, Cqual works by converting a

program augmented by type qualifiers into constraints on type

qualifier compatibility, which can be represented graphically. The

problem of checking correctness is then reduced to graph reachability.

Cqual's analysis employs a unique combination of flow-insensitive and

flow-sensitive analysis. It models program state as an

abstract store, which is a mapping from variables to types

(types contain qualifiers). The effect of a program statement on the

state is emulated as a "Hoare logic" type effect with possible

changes in variable types (ALLOC and ASSIGN

operations). This flow-insensitive phase also includes alias analysis

and effect inference. Following this, the flow-sensitive analysis

phase handles flow-sensitive qualifiers and linearity issues for alias

analysis. Finally, even though Cqual's analysis is not

path-sensitive, it does not suffer from false-positives due to

impossible paths. This is because Cqual's constraint satisfaction

logic tries to find at least one way to satisfy the constraints,

rather than finding all possible violations. However, since it does

not do path-sensitive analysis, the error message may not be as

informative (as in PREfix, for example).

One of the key innovations in Cqual is its practical approach to alias

analysis. The general problem in alias analysis is making updates to

one alias visible to others.

Cqual handles this by adding a layer of indirection between pointers

and their types by introducing an abstract location with each

variable. With this change, a variable is associated with an abstract

location, and abstract stores map abstract locations to

qualified types. Thus a type update to an abstract location is

automatically available to other references pointing to that location.

An abstract location is defined as linear if the type system

can prove that it corresponds to a single concrete memory location in

every execution; otherwise, it is non-linear. Updates to linear

locations can arbitrarily change their qualifiers (strong updates).

Updates to a non-linear location result in a unification of qualifiers

(weak update). Locations may be inferred as non-linear even if strong

updates are desired on them (Cqual is sound). Evidently, strong

updates give better opportunities to test qualifier constraints.

Linearity can be reclaimed by using Cqual's restrict keyword (or

equivalently the confine keyword). The expression restrict

x = e1 in e2 introduces a new name bound to the value of e1.

The name x is given to a new abstract location, and among all

aliases of e1 only x and values derived from x may

be used within e2. Thus, the location of x may be linear,

and hence may be strongly updated, even if the location of e1 is

non-linear.

Cqual's scalable, annotation-based analysis has been applied to large

software including the Linux kernel. It discovered several locking

bugs in Linux [57], and has been used to detect

format-string vulnerabilities too [137]. Cqual is

easy to use and provides strong soundness guarantees. However, there

are two problems with Cqual. Firstly, checking pre-written code with

Cqual needs substantial rewriting effort. Secondly, Cqual's

conservative aliasing assumptions may lead to a high number of

false-positives requiring additional annotation effort to suppress

them (restrict operator). Recently, Cqual has introduced automatic

confine inference by the static analyzer to reduce the burden

of aliasing annotations. In fact, it has been observed to apply to

roughly 95% of pre-written C code [10].

Usability

Cqual's design places strong emphasis on usability. Firstly, due to

its unique combination of intra-procedural and inter-procedural

analysis, Cqual is quite scalable. This is further demonstrated by

the number of bugs it has caught in the Linux kernel (4M+ LOC and

counting). Secondly, Cqual is easier to use than some other

annotation-based systems: it needs less annotation-effort, thanks to

automatic inference of type qualifiers. Also, confine-inference has

been shown to work 95% of the time on pre-written code. Thus, one

does not need to add heavy annotations to enforce linearity. Finally,

Cqual is getting ready for mainstream usage: it currently presents the

analysis results using Program Analysis Mode, an Emacs-based

GUI. However, there are two concerns with Cqual: (1) how much will

the rewriting effort be for porting legacy code to Cqual (a Houdini

[53] like annotation assistant would be useful), and

(2) it is not clear what is the false alarm rate of Cqual's

conservative alias analysis.

3.2 Tool-Based Techniques

Today, commodity-class static-checking technologies are still coming

up and are in a constant state of flux. While it is widely recognized

that static-checking should be integrated into the regular development

process, emerging static-checking techniques have two major drawbacks.

First, they suffer from highly noisy outputs, and second, existing

technologies are still not very mature and continue to evolve.

Consequently, developers tend to shy away from intrusive techniques

like annotations. Tool-based checkers find it easier to be adopted,

even if it is just out of curiosity. Indeed, many tool-based checkers

do not aim for soundness and just try to find as many bugs as possible

which help them achieve mass acceptance.

PREfix [26] is a static-checking tool that was designed to

achieve two main goals. First, PREfix should catch maximum number of

bugs while keeping the number of false positives. This kind of

requirement almost always translates to an unsound system. Second,

and most importantly, PREfix should be developer-friendly,

that is, developers should easily be able to integrate PREfix into

their existing development practices. Although many static-checking

tools cite this as their primary goal, none of them do it as good as

PREfix does. In fact, Microsoft acquired the company that owned

PREfix, and PREfix is now used in-house in Microsoft check code as it

is written on a daily basis. It is also going to be available as a

feature in upcoming releases of Visual Studio. PREfix makes three key

choices to ensure developer friendliness:

- Design a tool-based system rather than an annotation based

system.

- Minimum false positives: False positives are a serious practical

drawback to the mainstream acceptance of any static-checking system.

PREfix designers made minimizing false positives a first class design

goal. Also, it is known that less than 10% of the code of PREfix is

said to concern with analysis per se, most applies to the filtering

and presentation of output, to reduce the number of false positives

[125].

- Provide useful error flow information.

PREfix mainly targets memory errors like uninitialized memory, buffer

overflows, NULL-pointer dereferences, and memory leaks. One main

weakness of PREfix is that in the general case, there is not a method

for programmers to define their own custom checks.

PREfix is a path-sensitive static analyzer that employs symbolic

evaluation of execution paths. Path-sensitivity ensures that program

paths analyzed are only the paths that can be taken during execution,

thus keeping the number of false positives to a minimum. Also,

path-sensitivity helps PREfix provide useful information about the

reported errors. However, path-sensitive analysis can incur a heavy

cost; exponential path blowup due to control constructs and possibly

infinite paths due to loops make it impractical. The solution adopted

by PREfix is to explore only a representative set of paths, whose

number is configurable by the user. This is archetypal of PREfix's

design goals: sacrificing soundness to get maximum errors with minimal

false positives. It is possible to make the system sound, by for

example, applying less heavy-weight analysis on the remaining paths

(e.g., fixed-point based data flow analysis). However, this would be

in direct contradiction with PREfix's goal of keeping the

false-positive count extremely low.

PREfix employs a summary-based bottom-up inter-procedural

analysis:

- Functions are analyzed in bottom-up order in the call graph.

- As functions are analyzed, a summary of constraints is generated

for them.

- Since the analysis is bottom-up, every function call can be

replaced by the callee's summary. Thus a function gets analyzed for

each possible call site that calls it. In other words, the analysis

minimizes the impact of missing summaries for functions yet to be

analyzed.

- Recursion is broken arbitrarily.

PREfix running time scales linearly with program size due to the fixed

cutoff on number of paths. Some other interesting lessons learned from

PREfix are:

- A bulk of errors come for inter-procedural interactions. Hence,

static analyzers should be inter-procedural.

- Good user-interfaces with minimal false positives and visual

traces of error paths are very handy.

ESP is a highly scalable, property verification system for large C/C++

programs. It takes as input a set of source files and a specification

of a high level protocol that the code in the source files is expected

to satisfy. Its output is either a guarantee that the code satisfies

the protocol along all execution paths, or a browsable list of

execution traces that lead to violations of the protocol. ESP is

targeted towards large code bases where it is nearly impossible to

verify system-wide properties manually . Also, in existing large

scale systems, it is infeasible to annotate the code for potentially

checking all possible properties of interest. In addition, protocol

specifications can typically be isolated into separate aspects rather

than be spread apart through out the entire code base. ESP uses these

protocol specifications to check conformance in the code.

The analysis engine of ESP was designed to achieve four goals: it must

not validate any program that contains errors, it must scale to very

large programs, it must report few false errors, and it must produce

useful feedback that allows the programmer to investigate and fix

errors.

ESP achieves these goals by combining two separate phases of program

analysis: Phase one is a global context-sensitive

control-flow-insensitive analysis that produces a call graph and

information about the flow of values in the program. Phase two is an

inter-procedural context-sensitive data-flow analysis that

incorporates a restricted form of inter-procedural path

simulation. The first phase produces the program abstractions used by

the second phase, and also serves as a safety net for the second

phase: it is used to conservatively answer questions about the corner

cases that the more precise (and expensive) analysis in the second

phase cannot handle.

ESP's design reflects the fundamental trade-off between precision and

scalability. Whereas type-inference or data-flow analysis are highly

scalable (due to their unification semantics: program state is unified

at each join point), path-sensitive analysis is not. However,

path-sensitive analysis is more precise since it considers only

feasible paths. Just like PREfix, it is ESP's primary goal to

implement a precise analysis thus keeping the number of false

positives very low while finding a lot of errors. Hence, ESP chose to

retain path-sensitive analysis. Scalability concerns are addressed by

restricting the property space to finite state temporal safety

properties. This was assumed to be an intuitive model. The property

is specified using a finite state automaton (FSA). As the program

executes, a monitor maintains the current state of the FSA and signals

an error when the FSA transitions into an error state. So the goal of

verification is: is there some execution path that would cause the

monitor to signal an error ?

Let us compare path-sensitive analysis with data-flow analysis.

Assume that each of the analyses attempts to verify a certain

property. The fundamental difference between the two is that

data-flow property analysis tracks only the state of the FSA and

ignores non-state-changing code. On the other hand, path-sensitive

analysis symbolically executes the program and can thus track

execution state in addition. As a result, at branch points,

path-sensitive analysis can avoid infeasible paths: it queries the

current execution state, if the current execution state can decide a

branch direction, it is taken. Otherwise, the current state is split

and both branches are processed individually. In comparison,

data-flow analysis would simulate the state along both branches

combining the end result at the join point.

ESP has been successfully used for checking some large software

[91]. ESP has been used to verify certain file

I/O properties of a version of the gnu C compiler (roughly 150,000

lines of C code). ESP has also been used for validating the Windows

OS kernel against a category of security properties.

3.2.3 Abstract Interpretation: ASTREE

Abstract interpretation [74,8](AI) is a

form of program analysis that maps programs into more abstract

domains. This makes analysis more tractable and potentially useful

for checking. ASTREE is a static program analyzer that uses abstract

interpretation to discover bugs. The bugs targeted by ASTREE include

out-of-bound array accesses, integer division by zero, and similar

errors. Additionally, ASTREE checking can also include user-defined

asserts. One restriction imposed by ASTREE on the class of C

programs it checks is that they should not contain any dynamic memory

allocation, string manipulation, and restricts pointer use. This

allows for a fast and precise memory analysis which would not be

possible otherwise. Also, this restriction has not proved to be a

problem for verifying the class of programs ASTREE was originally

designed for: embedded programs as found in automobiles and aerospace.

ASTREE makes certain design choices which can be explained in the context

of safety-critical embedded programs as follows:

- ASTREE's static-checking is sound considering the need to give

correctness guarantees on safety-critical applications. Hence, in the

case of ASTREE, the soundness-completeness trade-off is more in favor

of soundness than completeness.